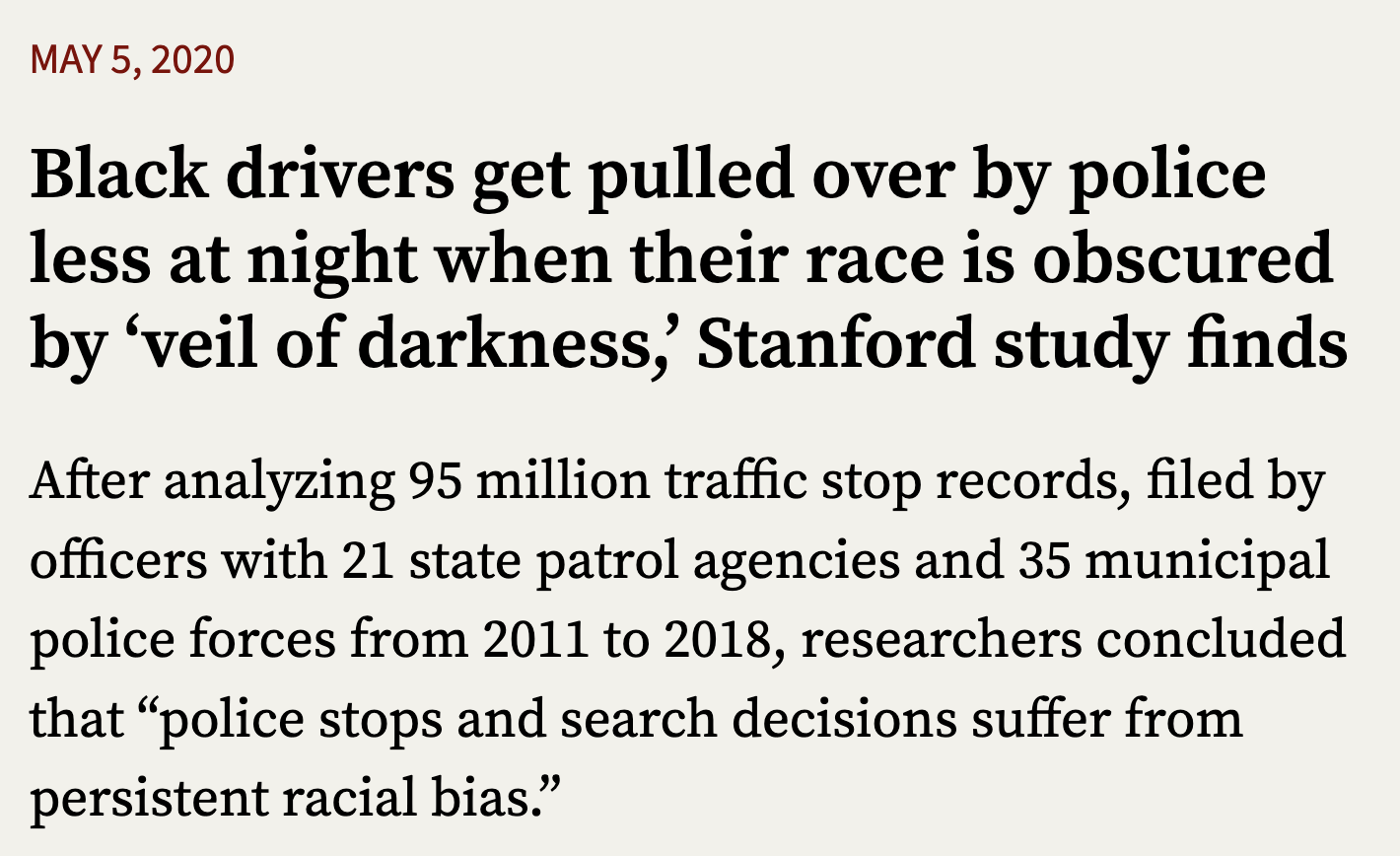

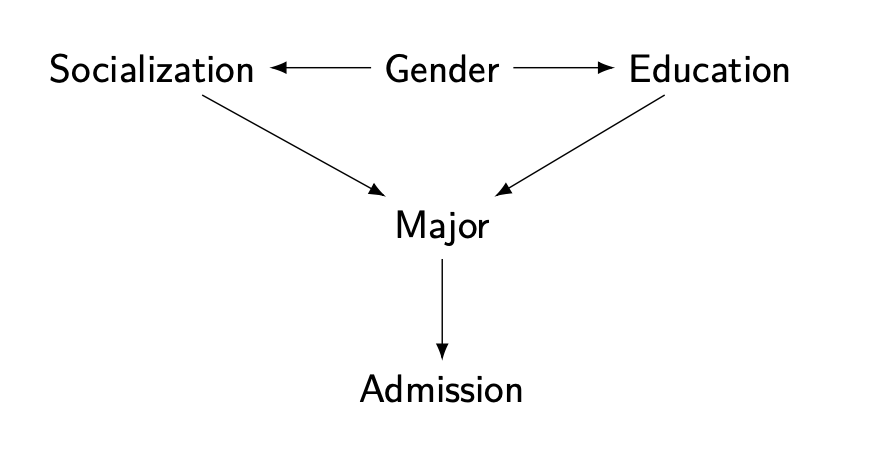

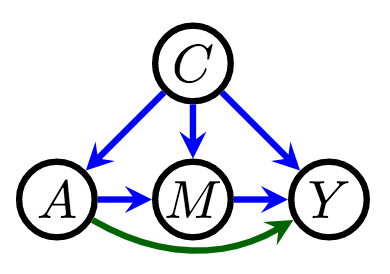

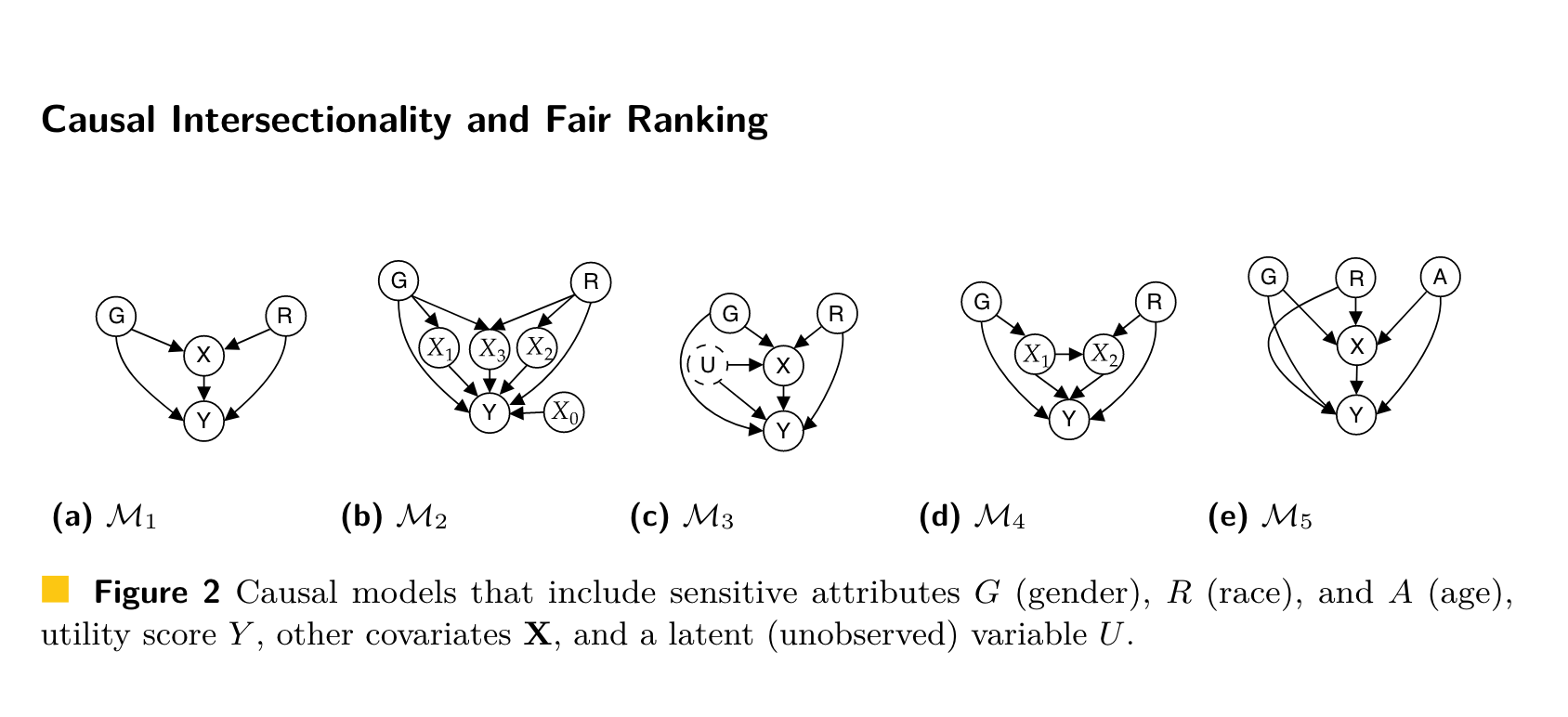

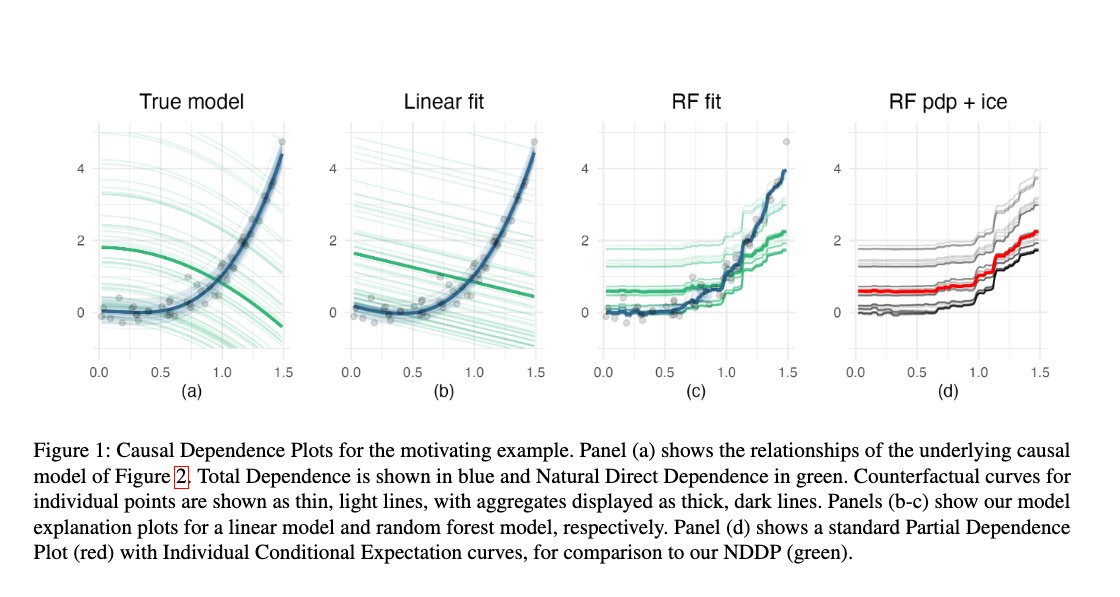

class: bottom, left, title-slide

.title[
# Algorithmic fairness and causal interpretability
]
.subtitle[
## Slides: joshualoftus.com/talks.html
]
.author[
### Joshua Loftus (LSE Statistics)
]

---
class: split-four

<style type="text/css">
.remark-slide-content {
    font-size: 1.2rem;
    padding: 1em 4em 1em 4em;
}
</style>

## Outline

- Unfair algorithms: examples and background
- Statistical fairness
- (Structural) causal models
- **Counterfactual fairness**
- Auditing (black-box) algorithms
- Causal interpretability

---
class: center, middle, inverse

# Counterfactual fairness

---

### Back in 2016

I thought: seems like this is about **causality**?

The "effect" of one variable, possibly "controlling for" others

Holland: **no causation without manipulation**

What can we manipulate? e.g. names on resumes

---

Randomize (or blind) the **perception** of a sensitive variable (e.g. race) at one (late?) time point

---

### Berkeley graduate admissions example

> The bias in the aggregated data stems **not from any pattern of discrimination on the part of admissions committees**, which seem quite fair on the whole, but apparently from **prior screening at earlier levels** of the educational system. Women are shunted by their **socialization and education** toward fields of graduate study that are generally more crowded, less productive of completed degrees, and less well funded, and that frequently offer poorer professional employment prospects.

From the final paragraph of Bickel et al (1975)

#### What information / covariates should we condition on?

---

### Directed Acyclic Graph (DAG) models

- Assumption: paths show conditional dependence
- Assumption: intervention to change one variable also affects all variables on paths away from it

---

### (Infamous?) policing example

"Algorithm does not *explicitly* use race"

--

in an unfair world, **other things can correlate with race**, e.g.
prior convictions

.pull-left[
<div id="htmlwidget-e9dffe663b32dc218980" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-e9dffe663b32dc218980">{"x":{"diagram":"\ngraph LR\n R-->M\n S-->M\n S-->P\n M-->P\n P-->C\n"},"evals":[],"jsHooks":[]}</script>
]

.pull-right[
Race `\(R\)`, structural racism `\(S\)`, arrested for marijuana `\(M\)`, prior conviction `\(P\)`, risk score `\(C\)`

If `\(C\)` is computed using only `\(P\)` (and not `\(R\)` *directly*), is that "fair"?
]

---

### Fairness focused on one (later?) stage

- *perception* of race/gender
- balance *among applicants*

#### does not fix unfairness that occurred earlier

**Counterfactuals** help us think about this.

**Depth** of the counterfactual story (starting earlier in time and/or length of causal pathway) corresponds to more/less fairness

---

## Defining fairness causally

Kusner, Loftus, Russell, Silva. *Counterfactual fairness* ([NeurIPS 2017](https://papers.nips.cc/paper/2017/hash/a486cd07e4ac3d270571622f4f316ec5-Abstract.html)):

Given a DAG, the predictor `\(\hat Y\)` is **counterfactually fair** if

`$$P(\hat Y_a | x, a) = P(\hat Y_{a'} | x, a)$$`

#### Proposition (sufficient, not necessary):

Any predictor `\(\hat Y\)` which is a function of only non-descendants of `\(A\)` in the DAG is counterfactually fair

---

### Pathway analysis / decomposition

.pull-left[
- [Kilbertus et al (2017)](https://papers.nips.cc/paper/2017/hash/f5f8590cd58a54e94377e6ae2eded4d9-Abstract.html): proxies and **resolving variables**
]

.pull-right[
- [Kusner et al (2017)](https://papers.nips.cc/paper/2017/hash/a486cd07e4ac3d270571622f4f316ec5-Abstract.html): path-dependent counterfactual fairness (see supplement)
- [Zhang et al (2017)](https://www.ijcai.org/proceedings/2017/549)
- [Nabi and Shpitser (2018)](https://ojs.aaai.org/index.php/AAAI/article/view/11553)
- [Zhang and Bareinboim (2018)](https://aaai.org/ocs/index.php/AAAI/AAAI18/paper/view/16949/0)
- [Chiappa (2019)](https://ojs.aaai.org//index.php/AAAI/article/view/4777)
- ...
]

---

### Statistical fairness `\(\leftrightarrow\)` causal fairness?

**Exercise**: Pick one or more definitions of statistical fairness and determine a DAG/SCM with a related/corresponding definition of [path-specific] counterfactual fairness

--

e.g. what if `\(Y\)` is a resolving variable?

---
class: split-four

### Every DAG is (importantly?) wrong

.column[
<div id="htmlwidget-2aba198eb91c97a92ff4" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-2aba198eb91c97a92ff4">{"x":{"diagram":"\ngraph TB\n Race-->Outcome\n"},"evals":[],"jsHooks":[]}</script>
]

.column[
<div id="htmlwidget-cbfe2617b4dbd527e052" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-cbfe2617b4dbd527e052">{"x":{"diagram":"\ngraph TB\n Racism-->Outcome\n"},"evals":[],"jsHooks":[]}</script>
]

.column[
<div id="htmlwidget-59a22a001c4d95451b73" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-59a22a001c4d95451b73">{"x":{"diagram":"\ngraph TB\n A-->X\n X-->Y\n A-->Y\n"},"evals":[],"jsHooks":[]}</script>
]

.column[
<div id="htmlwidget-e8243cad70c255e2466d" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-e8243cad70c255e2466d">{"x":{"diagram":"\ngraph TB\n A-->X\n A-->R\n X-->Y\n R-->Y\n A-->Y\n"},"evals":[],"jsHooks":[]}</script>
]

**Transparency**: we can say specifically what we disagree on

**Interpretation**: meanings of variables, edges (mechanisms)

---

## [Intersectional](https://en.wikipedia.org/wiki/Intersectionality) fairness

*Causal Intersectionality and Fair Ranking* (Yang, Stoyanovich, Loftus, [FORC 2021](https://drops.dagstuhl.de/opus/portals/lipics/index.php?semnr=16187))

- Multiple sensitive attributes, e.g.
race and gender
- Variety of relationships with other mediating variables
- Some of these mediators may be resolving/non-resolving for different sensitive attributes

Lots of scholarship, not much using formal mathematical models. See [Bright et al (2016)](https://www.journals.uchicago.edu/doi/abs/10.1086/684173), [O'Connor et al (2019)](https://www.tandfonline.com/doi/abs/10.1080/02691728.2018.1555870), and a few other references in our paper

---

## "Moving company" example

.pull-left[
Race, gender, weightlifting test, application score

<div id="htmlwidget-62322b30683bf1b7f530" style="width:504px;height:504px;" class="DiagrammeR html-widget"></div>
<script type="application/json" data-for="htmlwidget-62322b30683bf1b7f530">{"x":{"diagram":"\ngraph TB\n R-->X\n G-->X\n R-->Y\n X-->Y\n G-->Y\n"},"evals":[],"jsHooks":[]}</script>
]

.pull-right[
Weightlifting considered a **resolving variable** (company argues it is a necessary qualification)

<img src="../moving.png" width="100%" style="display: block; margin: auto;" />

(See paper for results)
]

---

### Untangling intersectional relationships

*Race as a Bundle of Sticks* (Sen and Wasow, 2016)

Causal mediation with **multiple mediators** and **multiple causes** is a hard problem, limiting realistic application until better methods/data are available

---
class: center, middle, inverse

# Auditing (black-box) algorithms

---

### Classic methods (context/comparison)

#### Decision trees (e.g.: early vaccine eligibility)

- If `Age >= 40` then `yes`, otherwise `continue`
- If `HighRisk == TRUE` then `yes`, otherwise `continue`
- If `Job == CareWorker` then `yes`, otherwise `no`

#### Regression

- Predictor variables in the dataset: `\(x_1, x_2, \ldots, x_p\)`
- Coefficients/**parameters**, unknown: `\(\beta_1, \beta_2, \ldots, \beta_p\)`
- Outcome variable, also in data: `\(y\)`
- Algorithm inputs the data, outputs: estimated parameters, predictions of `\(y\)` using "recipe" or "weighted" combination

`$$\beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p$$`

---

## Transparent models

Understand how the model uses `\(x_1\)` to make a prediction...

## Opaque or black-box models

Internal model structure is hidden, or complicated enough to be difficult to understand

### xAI / IML

Tools to help human understanding of models

Usually focused on input-output

---

## Model-agnostic interpretability

If we can only access the input-output interface (e.g. external auditing of a proprietary model)

Does this black-box model discriminate? How does it depend on `age`?

Various IML tools: variable importance, **partial dependence plots**, local surrogates, "counterfactual" explanations (misnamed)

**Problem**: In the question "how does the output depend on a given input?" *what do we mean* by "depend"?

e.g. fairness and (proxies for) sensitive attributes

---
class: center, middle, inverse

# Causal interpretability

---

## Causal interpretability

- *Causal Interpretations of Black-Box Models* (Zhao and Hastie, 2019)

Conditions under which a particular IML tool (PDP/ICE plots) can give valid causal conclusions

- *Causal Dependence Plots for Interpretable Machine Learning* (Loftus, Bynum, Hansen, 2023 [preprint](https://arxiv.org/abs/2303.04209))

---

### Assume a causal model, create explanations

Checking: if explanations are absurd, *either* the black-box model *or* the auxiliary causal model has problems

---
class: center, middle

# Conclusion

## and takeaway messages

---

### Exciting advances in causal modeling

Observational data, double machine learning, causal reinforcement learning, ...

### Applications in ethical data science

Prioritizing normative values: fairness, privacy, interpretability, replicability, "alignment," ...
---

### Bet on causality

Claim: **causality** points us in good directions for research

- Choosing which covariates to condition on in fair prediction/decisions
- Changing focus from prediction to action, interventions, policy design, etc
- Making models/assumptions transparent

Deirdre Mulligan at the NeurIPS 2022 causal fairness workshop:

> the important role [causal models] can play in supporting **collaborative reasoning about contested concepts, facilitating stakeholder participation** in decisions about how to meet policy goals within technical systems

---

### Always question, doubt, test...

Jack Schwartz in [The Pernicious Influence of Mathematics on Science](https://www.sciencedirect.com/science/article/abs/pii/S0049237X09706032):

> computing machines, with a perfect lack of discrimination, will do any foolish thing they are told to do

> the simple-mindedness of mathematics–its willingness, like that of a computing machine, to elaborate upon any idea, however absurd; [...]

> Unfortunately however, an absurdity in uniform is far more persuasive than an absurdity unclad. The very fact that a theory appears in mathematical form, [...] somehow makes us more ready to take it seriously.

---

### What's new (or changing) with algorithms?

*Weapons of Math Destruction* (WMD) book: **scale**, opacity(?)

Incontestability

Scaling up data infrastructure has many benefits, but also...

Surveillance by governments: [Seeing Like a State (book)](https://en.wikipedia.org/wiki/Seeing_Like_a_State)

Surveillance by business: [Surveillance capitalism](https://en.wikipedia.org/wiki/Surveillance_capitalism)

[Predatory inclusion](https://journals.sagepub.com/doi/pdf/10.1177/2329496516686620) (e.g.
wellness app sharing [patient data](https://techcrunch.com/2023/04/04/monument-tempest-alcohol-data-breach/))

[Data colonialism](https://purdue.edu/critical-data-studies/collaborative-glossary/data-colonialism.php)

---

### Philosophy of statistics/science

.pull-left[
Richard Feynman, 1974
]

.pull-right[
[Cargo cult science](https://en.wikipedia.org/wiki/Cargo_cult_science)/[statistics](https://www.significancemagazine.com/2-uncategorised/593-cargo-cult-statistics-and-scientific-crisis)

[Mindless statistical inference](https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.821.8442&rep=rep1&type=pdf)

*Imitate success*
]

---

### Balancing views

[A. N. Whitehead](https://en.wikipedia.org/wiki/Alfred_North_Whitehead) in *An Introduction to Mathematics* (1911):

> It is a profoundly erroneous truism [...] that we should *cultivate the habit of thinking of what we are doing*. The precise opposite is the case. **Civilization advances by extending the number of important operations which we can perform without thinking** about them. Operations of thought are like cavalry charges in a battle — they are strictly limited in number, they require fresh horses, and must only be made at decisive moments.

Key: what does it mean that *civilization* advances? Who? How?

---

### Decreasing human judgment?

Flowchart version of statistics

Unintuitive for many statisticians

We often want "assumption lean" methods

Algorithm design *can* build assumptions into infrastructure that becomes frozen, incontestable

---

### Wrap-up: ethical data science

Data and information technology are powerful tools

As with other human technologies (e.g. fossil fuel energy, nuclear technology) the **consequences** of data science will depend on **who uses it** and **for what purposes**

**Data scientists** do work that becomes part of our information infrastructure. Like **city planners**, our decisions can impact everyone

**Professionalism**: (e.g.
medicine, engineering, law) often require ethical training, license to practice

---

### First, do no harm

Tradition in medicine, [Hippocratic oath](https://en.wikipedia.org/wiki/Primum_non_nocere)

Blame for harm typically does not fall on machines or algorithms, but on the people who built or used them (that could be you!)

**Avoiding harm is only the beginning** of ethics

(Credit for benefit of algorithms)

---

### Thank you!

Reading for a fairly general audience: The long road to fairer algorithms ([Nature, 2020](https://www.nature.com/articles/d41586-020-00274-3))

[joshualoftus.com](https://joshualoftus.com) slides and other info

### Parting wisdom (hard won, not by me)

Remember Box's rule: what's **important**? what's **useful**? (to whom?)
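---

### Backup: checking counterfactual fairness in a toy model

A minimal sketch, not from the talk, of the counterfactual fairness definition and the non-descendants proposition from earlier in the deck. It assumes a toy linear SCM, `\(X = \alpha A + U\)` with `\(U\)` independent of `\(A\)` and `\(\alpha\)` known. The names `ALPHA`, `simulate`, `abduct_u`, `counterfactual_x`, and the two predictors are hypothetical, chosen only for illustration. Because this toy model is deterministic given `\(U\)`, the distributional condition reduces to comparing `\(\hat Y_a\)` and `\(\hat Y_{a'}\)` for each individual.

```python
import random

ALPHA = 2.0  # assumed (hypothetical) causal effect of A on X in the toy SCM


def simulate(n, seed=0):
    """Draw n rows (a, u, x) from the toy SCM X = ALPHA * A + U."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        a = rng.randint(0, 1)    # binary sensitive attribute
        u = rng.gauss(0.0, 1.0)  # exogenous noise, not a descendant of A
        rows.append((a, u, ALPHA * a + u))
    return rows


def abduct_u(x, a):
    """Abduction step: recover the noise term implied by the observed (x, a)."""
    return x - ALPHA * a


def counterfactual_x(x, a, a_new):
    """Value X would have taken had A been a_new, holding U fixed."""
    return ALPHA * a_new + abduct_u(x, a)


def predictor_uses_x(x, a):
    """Never reads A directly, but X is a descendant of A."""
    return 0.5 * x


def predictor_uses_u(x, a):
    """Reads (x, a) only to recover U, so it is a function of U alone."""
    return 0.5 * abduct_u(x, a)


def max_counterfactual_gap(predictor, rows):
    """Largest |Yhat_a - Yhat_a'| over individuals when A is flipped."""
    gap = 0.0
    for a, _u, x in rows:
        a_new = 1 - a
        x_cf = counterfactual_x(x, a, a_new)
        gap = max(gap, abs(predictor(x, a) - predictor(x_cf, a_new)))
    return gap


if __name__ == "__main__":
    data = simulate(1000)
    print("gap, predictor on X:", max_counterfactual_gap(predictor_uses_x, data))
    print("gap, predictor on U:", max_counterfactual_gap(predictor_uses_u, data))
```

In this sketch the predictor built on `\(X\)` shifts by `\(0.5\,\alpha\)` when `\(A\)` is flipped, even though it never reads `\(A\)`, while the predictor built on the abducted `\(U\)` does not shift. With real data both `\(\alpha\)` and the DAG would have to be estimated or assumed, so such a check is only as good as the causal model behind it.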