Human-centered data science

I believe data science should empower people with tools to guide the directions of research and technology consciously and inclusively. I’m motivated by applications in algorithmic fairness and discrimination, responsible AI, and model interpretability and explainability. My research is primarily theoretical and develops modeling frameworks based on causality, a theory of changing the world. I hope these frameworks enable clear thinking about challenging problems and promote effective communication among interdisciplinary researchers and stakeholders alike.

See some recent talks/slides; more information can be found on my Google Scholar profile page.

Causal models in machine learning

Conceptual simplicity is important in human-centered data science. Using simple causal models as hypotheses allows people to ask the questions they really care about, make their assumptions transparent, and communicate clearly to broad audiences. I argue my case for a causal revolution in data science in this recent position paper (ICML 2024).

Much of my work in this area focuses on fair machine learning and algorithmic discrimination. With the paper Counterfactual Fairness (NeurIPS 2017), my co-authors and I helped start a growing, interdisciplinary research field of causal fairness. See this Nature Comment for a high-level overview.

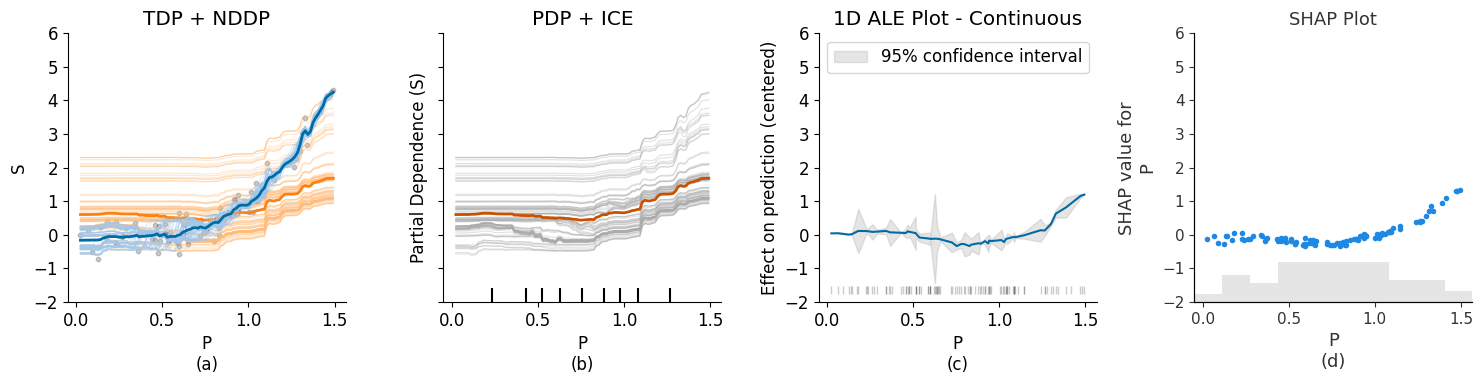

Recently I started working on causal frameworks for model interpretation and explanation. Beginning with feature dependence visualization, our paper Causal Dependence Plots (NeurIPS 2024) uses information about causal dependence between features when plotting predicted outputs. The accompanying figure compares CDPs (a) with several other methods for visualizing feature dependence on a simulated data example. Notice that the blue curve in panel (a), our Total Dependence Plot, indicates a stronger increasing relationship that all the other methods fail to capture.
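To make the contrast concrete, here is a minimal sketch in Python. It is not the implementation from the paper: the two-variable structural causal model, the coefficient `A`, and the `predict` function are all hypothetical choices made for illustration. It compares a partial-dependence-style curve, which varies one feature while holding the others at their observed values, with a total-dependence-style curve, which also propagates the change to a causally downstream feature.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical structural causal model (an assumption for illustration):
#   X1 := U1
#   X2 := A * X1 + U2
A = 2.0
n = 5000
u1 = rng.normal(size=n)
u2 = rng.normal(size=n)
x1 = u1
x2 = A * x1 + u2


def predict(x1, x2):
    """Hypothetical fitted model; a fixed linear function stands in for a black box."""
    return 1.0 * x1 + 0.5 * x2


grid = np.linspace(-2.0, 2.0, 9)


def partial_dependence(grid):
    # Set X1 to each grid value, keep the observed X2 unchanged,
    # and average the predictions.
    return np.array([predict(np.full(n, v), x2).mean() for v in grid])


def total_dependence(grid):
    # Intervene on X1, then recompute X2 from its structural equation
    # with the same noise u2, so the change in X1 propagates downstream.
    return np.array([predict(np.full(n, v), A * v + u2).mean() for v in grid])


pd_curve = partial_dependence(grid)
td_curve = total_dependence(grid)

# Summarize each curve by its fitted slope over the grid.
pd_slope = np.polyfit(grid, pd_curve, 1)[0]
td_slope = np.polyfit(grid, td_curve, 1)[0]
print(f"partial dependence slope: {pd_slope:.2f}")
print(f"total dependence slope:   {td_slope:.2f}")
```

In this toy setup the partial-dependence slope equals the direct coefficient on `x1` (1.0), while the total-dependence slope also includes the indirect path through `x2` (1.0 + 0.5 × 2.0 = 2.0), which is the kind of stronger increasing relationship described above.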

Publications

J. R. Loftus, L. E. J. Bynum, S. Hansen. Causal Dependence Plots. Advances in Neural Information Processing Systems, (NeurIPS 2024, accepted). [link]

L. E. J. Bynum, J. R. Loftus, J. Stoyanovich. A New Paradigm for Counterfactual Reasoning in Fairness and Recourse. Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, (IJCAI 2024). [link]

L. E. J. Bynum, J. R. Loftus, J. Stoyanovich. Counterfactuals for the Future. Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence, (AAAI 2023). [link]

L. E. J. Bynum, J. R. Loftus, J. Stoyanovich. Disaggregated Interventions to Reduce Inequality. Equity and Access in Algorithms, Mechanisms, and Optimization, (EAAMO 2021). [link]

K. Yang, J. R. Loftus, J. Stoyanovich. Causal intersectionality and fair ranking. Foundations of Responsible Computing, (FORC 2021). [link]

M. J. Kusner, C. Russell, J. R. Loftus, R. Silva. Making Decisions that Reduce Discriminatory Impact. International Conference on Machine Learning, (ICML 2019). [link]

M. J. Kusner, J. R. Loftus, C. Russell, R. Silva. Counterfactual fairness. Advances in Neural Information Processing Systems, (NeurIPS 2017). [link]

C. Russell, M. J. Kusner, J. R. Loftus, R. Silva. When worlds collide: integrating different counterfactual assumptions in fairness. Advances in Neural Information Processing Systems, (NeurIPS 2017). [link]

J. R. Loftus, C. Russell, M. J. Kusner, R. Silva. Causal reasoning for algorithmic fairness. Preprint.

Post-selection inference

This work addresses the mathematical and computational challenges of conducting inference after model selection procedures with complicated underlying geometry. It enables significance testing after some of the most popular model selection procedures, such as the lasso with regularization chosen by cross-validation, or forward stepwise selection with the number of steps chosen by AIC or BIC. Often the resulting significance tests are slightly modified versions of the classical tests in regression analysis. This blog post introduces the basic idea.
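A small simulation can illustrate why selection has to be accounted for. The sketch below is a toy example in Python under strong assumptions (independent standard normal test statistics, a global null, and selection of the single largest statistic); it is not the method developed in the papers listed here, and it does not use the selectiveInference package. The naive p-value treats the selected statistic as if it had been chosen in advance, while the adjusted p-value uses the null distribution of the maximum.

```python
import math

import numpy as np

rng = np.random.default_rng(1)
n_sims, p = 20000, 10

# Global null: p independent z-statistics per simulation, no true effects.
z = rng.normal(size=(n_sims, p))

# Selection step: keep the statistic with the largest absolute value.
z_selected = np.abs(z).max(axis=1)

# Two-sided tail probability P(|Z| > t) for a standard normal.
tail = np.array([math.erfc(t / math.sqrt(2.0)) for t in z_selected])

# Naive p-value: ignores that the variable was chosen for being extreme.
p_naive = tail

# Adjusted p-value: uses the null distribution of the maximum of p
# independent |Z| values, which is the law of the selected statistic here.
p_adjusted = 1.0 - (1.0 - tail) ** p

alpha = 0.05
naive_rate = (p_naive < alpha).mean()
adjusted_rate = (p_adjusted < alpha).mean()
print(f"naive rejection rate:    {naive_rate:.3f}")
print(f"adjusted rejection rate: {adjusted_rate:.3f}")
```

With these settings the naive test rejects far more often than the nominal 5% level, while the adjusted test stays close to it. The procedures discussed above handle much richer selection events, but the underlying concern is the same.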

Software

I’m a co-author of the selectiveInference package on CRAN.

Publications

X. Tian, J. R. Loftus, J. E. Taylor. Selective inference with unknown variance via the square-root LASSO. Biometrika, 2018. [link]

J. E. Taylor, J. R. Loftus, R. J. Tibshirani. Inference in adaptive regression via the Kac-Rice formula. Annals of Statistics, 2016. [link]

J. R. Loftus. Selective inference after cross-validation. Preprint.

J. R. Loftus, J. E. Taylor. Selective inference in regression models with groups of variables. Preprint.

J. R. Loftus, J. E. Taylor. A significance test for forward stepwise model selection. Preprint.

Other publications

J. R. Loftus. Position: The Causal Revolution Needs Scientific Pragmatism. Proceedings of the 41st International Conference on Machine Learning, (ICML 2024). [link]

M. Fahrenwaldt, et al. Fairness: plurality, causality, and insurability. European Actuarial Journal, 2024. [link]

L. Rosenblatt, et al. Epistemic Parity: Reproducibility as an Evaluation Metric for Differential Privacy. Proceedings of the VLDB Endowment, (VLDB 2023). [link]

L. E. J. Bynum, et al. An Interactive Introduction to Causal Inference. IEEE Workshop on Visualization for AI Explainability, (VISxAI 2022). [link]

J. Xu, et al. Landscape of monoallelic DNA accessibility in mouse embryonic stem cells and neural progenitor cells. Nature Genetics, 2017. [link]